[Insert Clickbait Headline About Progressive Enhancement Here]

Late last week, Josh Korr, a project manager at Viget, posted at length about what he sees as a fundamental flaw with the argument for progressive enhancement. In reading the post, it became clear to me that Josh really doesn’t have a good grasp of progressive enhancement or the reasons its proponents think it’s a good philosophy to follow. Despite claiming to be “an expert at spotting fuzzy rhetoric and teasing out what’s really being said”, Josh makes a lot of false assumptions and inferences. My response would not have fit in a comment, so here it is…

Before I dive in, it’s worth noting that Josh admits that he is not a developer. As such, he can’t really speak to the places where the rubber meets the road with respect to progressive enhancement. Instead, he focuses on the argument for it, which he sees as a purely moral one… and a flimsily moral one at that.

I’m also unsure as to how Josh would characterize me. I don’t think I fit his mold of PE “hard-liners”, but since I’ve written two books and countless articles on the subject and he quotes me in the piece, I’ll go out on a limb and say he probably thinks I am.

OK, enough with the preliminaries; let’s jump over to his piece…

Right out of the gate, Josh demonstrates a fundamental misread of progressive enhancement. If I had to guess, it probably stems from his source material, but he sees progressive enhancement as a moral argument:

It’s a moral imperative that everything on the web should be available to everyone everywhere all the time. Failing to achieve — or at least strive for — that goal is inhumane.

Now, he’s quick to admit that no one has ever explicitly said this, but this is his takeaway from the articles and posts he’s read. It’s a pretty harsh, black-and-white, you’re-either-with-us-or-against-us sort of statement, the kind that has so many people picking sides and lobbing rocks and other heavy objects at anyone who disagrees with them. Yet everyone he quotes in the piece as evidence that this is progressive enhancement’s central conceit is much more of an “it depends” sort of person.

To clarify, progressive enhancement is neither moral nor amoral. It’s a philosophy that recognizes the nature of the Web as a medium and asks us to think about how to build products that are robust and capable of reaching as many potential customers as possible. It isn’t concerned with any particular technology; it simply asks that we look at each tool we use with a critical eye and consider both its benefits and drawbacks. And it’s certainly not anti-JavaScript.

I could go on, but let’s circle back to Josh’s piece. Off the bat he makes some pretty bold claims about what he intends to prove in this piece:

- Progressive enhancement is a philosophical, moral argument disguised as a practical approach to web development.

- This makes it impossible to engage with at a practical level.

- When exposed to scrutiny, that moral argument falls apart.

- Therefore, if PEers can’t find a different argument, it’s ok for everyone else to get on with their lives.

For the record, I plan to address his arguments quite practically. As I mentioned, progressive enhancement is not solely founded on morality, though that can certainly be viewed as a facet. The reality is that progressive enhancement is quite pragmatic, addressing the Web as it exists, not as we might hope it exists or as we happen to experience it.

Over the course of a few sections (which I wish I could link to directly, but alas, the headings don’t have unique IDs), he examines a handful of quotes and attempts to tease out their hidden meaning by following the LSAT’s Logical Reasoning framework. We’ll start with the first one.

Working without JavaScript

Statement

- “When we write JavaScript, it’s critical that we recognize that we can’t be guaranteed it will run.” — Aaron Gustafson

- “If you make your core tasks dependent on JavaScript, some of your potential users will inevitably be left out in the cold.” — Jeremy Keith

Unstated assumptions:

- Because there is some chance JavaScript won’t run, we must always account for that chance.

- Core tasks can always be achieved without JavaScript.

- It is always bad to ignore some potential users for any reason.

His first attempt at teasing out the meaning of these statements comes close, but ignores some critical word choices. First off, neither Jeremy nor I speak in absolutes. As I mentioned before, we (and the other folks he quotes) all believe that the right technical choices for a project depend specifically on the purpose and goals of that project. In other words, it depends. We intentionally avoid absolutist words like “always” (which, incidentally, Josh has no problem throwing around, on his own or on our behalf).

For the development of most websites, the benefits of following a progressive enhancement philosophy far outweigh the cost of doing so. I’m hoping Josh will take a few minutes to read my post on the true cost of progressive enhancement in relation to actual client projects. As a project manager, I hope he’d find it enlightening and useful.

It’s also worth noting that he’s not considering the reason we make statements like this: Many sites rely 100% on JavaScript without needing to. The reasons why some sites (news sites, for instance) are built to be completely reliant on a fragile technology are somewhat irrelevant. What is relevant is that it happens. Often. That’s why I said “it’s critical that we recognize that we can’t be guaranteed it will run” (emphasis mine). A lack of acknowledgement of JavaScript’s fragility is one of the main problems I see with web development today. I suspect Jeremy and everyone else quoted in the post feel exactly the same. To be successful in a medium, you need to understand the medium. And the (sad, troubling, interesting) reality of the Web is that we don’t control a whole lot. We certainly control a whole lot less than we often believe we do.
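To make that fragility concrete, here is a minimal TypeScript sketch of the usual pattern: serve working HTML first, check for the capabilities an enhancement needs before applying it, and treat any failure during enhancement as “stay on the baseline.” The names (`FeatureEnv`, `supportsEnhancement`, `enhance`) are hypothetical, the particular features checked are only examples, and passing the environment in as a parameter is an assumption made so the logic can run outside a browser.

```typescript
// Hypothetical shape of the globals an enhancement might depend on.
// In a browser you might pass `globalThis`; taking it as a parameter
// lets the logic be exercised anywhere.
interface FeatureEnv {
  document?: { querySelector?: unknown };
  fetch?: unknown;
  FormData?: unknown;
}

type Experience = "baseline" | "enhanced";

// Feature detection: confirm each capability exists instead of assuming it.
function supportsEnhancement(env: FeatureEnv): boolean {
  return (
    typeof env.document?.querySelector === "function" &&
    typeof env.fetch === "function" &&
    typeof env.FormData === "function"
  );
}

// Apply the enhancement only when it can work. If it throws, report
// "baseline": assuming the enhancement only layers behavior on top of the
// server-rendered HTML, that HTML is still there and still works.
function enhance(env: FeatureEnv, applyEnhancement: () => void): Experience {
  if (!supportsEnhancement(env)) {
    return "baseline";
  }
  try {
    applyEnhancement();
    return "enhanced";
  } catch {
    return "baseline";
  }
}

// An environment missing the required features.
console.log(enhance({}, () => {})); // prints "baseline"

// A browser-like environment where the enhancement itself fails to run.
const browserLike: FeatureEnv = {
  document: { querySelector: () => null },
  fetch: () => Promise.resolve(),
  FormData: class {},
};
console.log(enhance(browserLike, () => { throw new Error("bundle failed"); })); // prints "baseline"
console.log(enhance(browserLike, () => {})); // prints "enhanced"
```

Either way, the user ends up with something that works; JavaScript only decides how nice it is.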

As I mentioned, I disagree with his characterization of the argument for progressive enhancement as a moral one. Morality can be one argument for progressive enhancement, and as a proponent of egalitarianism I certainly see that. But it’s not the only one. If you’re in business, there are a few really good business-y reasons to embrace progressive enhancement:

- Legal: Progressive enhancement and accessibility are very closely tied. Whether brought by legitimate groups or opportunists, lawsuits over the accessibility of your web presence can happen; following progressive enhancement may help you avoid them.

- Development Costs: As I mentioned earlier, progressive enhancement is a more cost-effective approach, especially for long-lived projects. Here’s that link again: The True Cost of Progressive Enhancement.

- Reach: The more means by which you enable users to access your products, information, etc., the more opportunities you create to earn their business. Consider that no one thought folks would buy big-ticket items on mobile just a few short years ago. Boy, were they wrong. Folks buy cars, planes, and more from their tablets and smartphones on the regular these days.

- Reliability: When your site is down, not only do you lose potential customers, you run the risk of losing existing ones too. There have been numerous incidents where big sites got hosed due to JavaScript dependencies and they didn’t have a fallback. Progressive enhancement ensures users can always do what they came to your site to do, even if it’s not the ideal experience.

Hmm, no moral arguments for progressive enhancement there… but let’s continue.

Some experience vs. no experience

Statement

- “[With a PE approach,] Older browsers get a clunky experience with full page refreshes, but that’s still much, much better than giving them nothing at all.” — Jeremy Keith

- “If for some reason JavaScript breaks, the site should still work and look good. If the CSS doesn’t load correctly, the HTML content should still be there with meaningful hyperlinks.” — Nick Pettit

Unstated assumptions:

- A clunky experience is always better than no experience.

- HTML content — i.e. text, images, unstyled forms — is the most important part of most websites.

You may be surprised to hear that I have no issue with Josh’s distillation here. Clunky is a bit of a loaded word, but I agree that an experience is better than no experience, especially for critical tasks like checking your bank account, registering to vote, or making a purchase from an online shop. In my book, I talk a little bit about a strange thing we experienced when A List Apart stopped delivering CSS to Netscape Navigator 4 way back in 2001:

We assume that those who choose to keep using 4.0 browsers have reasons for doing so; we also assume that most of those folks don’t really care about “design issues.” They just want information, and with this approach they can still get the information they seek. In fact, since we began hiding the design from non–compliant browsers in February 2001, ALA’s Netscape 4 readership has increased, from about 6% to about 11%.

Folks come to our web offerings for a reason. Sometimes it’s to gather information, sometimes it’s to be entertained, sometimes it’s to make a purchase. It’s in our best interest to remove every potential obstacle that could keep them from doing that. That’s good customer service.

Project priorities

Statement

- “Question any approach to the web where fancy features for a few are prioritized & basic access is something you’ll ‘get to’ eventually.” — Tim Kadlec

Unstated assumptions:

- Everything beyond HTML content is superfluous fanciness.

- It’s morally problematic if some users cannot access features built with JavaScript.

Not to put words in Tim’s mouth (as Josh does here), but what Tim’s quote is discussing is hype-driven (as opposed to user-centered) design. We (as developers) often prioritize our own convenience/excitement/interest over our users’ actual needs. It doesn’t happen all the time (note I said often), but it happens frequently enough to require us to call it out now and again (as Tim did here).

As for the “unstated assumptions”, I know for a fact that Tim would never call “everything beyond HTML” superfluous. What he is saying is that we should question (as in weigh the pros and cons of) each and every design pattern and development practice we consider. It’s important to do this because there are always tradeoffs. Some considerations that should be on your list include:

- Download speed;

- Time to interactivity;

- Interaction performance;

- Perceived performance;

- Input methods;

- User experience;

- Screen size & orientation;

- Visual hierarchy;

- Aesthetic design;

- Contrast;

- Readability;

- Text equivalents of rich interfaces for visually impaired users and headless UIs;

- Fallbacks; and

- Copywriting.

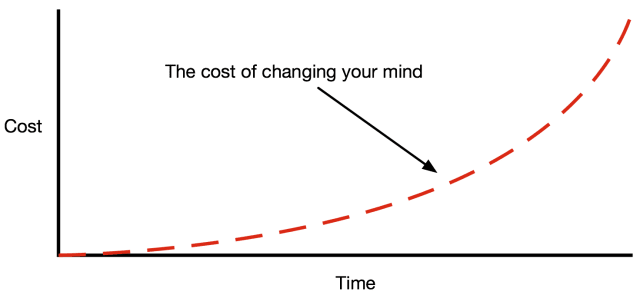

This list is by no means exhaustive nor is it in any particular order; it’s what came immediately to mind for me. Some interfaces may have fewer or more considerations as each is different. And some of these considerations might be in opposition to others depending on the interface. It’s critical that we consider the implications of our design decisions by weighing them against one another before we make any sort of decision about how to progress. Otherwise we open ourselves up to potential problems and the cost of changing things goes up the further into a project we are:

As a project manager, I’m sure Josh understands this reality.

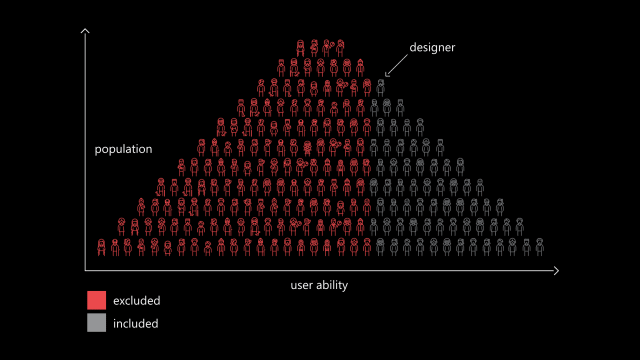

As to the “morally problematic” bit, I’ll refer back to my earlier discussion of business considerations. Sure, morality can certainly be part of it, but I’d argue that it’s unwise to make assumptions about your users regardless. It’s easy to fall into the trap of thinking that all of or users are like us (or like the personas we come up with). My employer, Microsoft, makes a great case for why we should avoid doing this in their Inclusive Design materials:

If you’re in business, it doesn’t pay to exclude potential customers (or alienate current ones).

Erecting unnecessary barriers

Statement

- “Everyone deserves access to the sum of all human knowledge.” — Nick Pettit

- “[The web is] built with a set of principles that — much like the principles underlying the internet itself — are founded on ideas of universality and accessibility. ‘Universal access’ is a pretty good rallying cry for the web.” — Jeremy Keith

- “The minute we start giving the middle finger to these other platforms, devices and browsers is the minute where the concept of The Web starts to erode. Because now it’s not about universal access to information, knowledge and interactivity. It’s about catering to the best of breed and leaving everyone else in the cold.” — Brad Frost

Unstated assumptions:

- What’s on the web comprises the sum of human knowledge.

- Progressive enhancement is fundamentally about universal access to this sum of human knowledge.

- It is always immoral if something on the web isn’t available to everyone.

I don’t think anyone quoted here would argue that the Web (taken in its entirety) is “the sum of all human knowledge”; Nick, I imagine, was using that phrase somewhat hyperbolically. But there is a lot of information on the Web that folks should have access to, whether from a business standpoint or a legal one. What Nick, Jeremy, and Brad are really highlighting here is that we often make somewhat arbitrary design and development decisions that can block access to useful or necessary information and interactions.

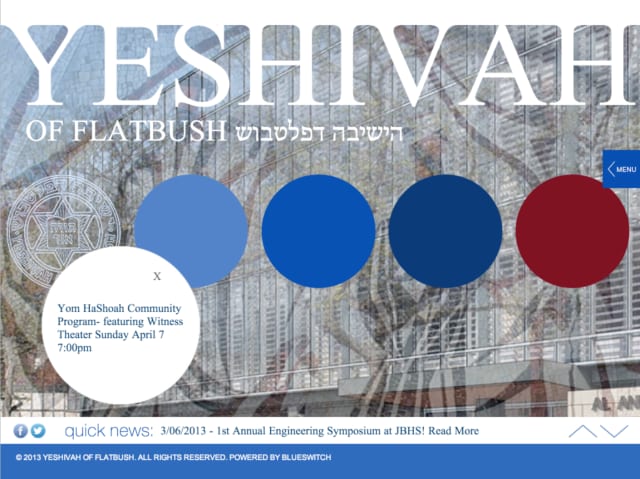

In my talk Designing with Empathy (slides), I discussed “mystery meat” navigation. I can’t imagine any designer sets out to make their site difficult to navigate, but we are influenced by what we see (and are inspired by) on the web. Some folks took inspiration from web-based art projects like this Toyota microsite:

Though probably not directly influenced by On Toyota’s Mind, Yeshiva of Flatbush was certainly influenced by the concept of “experiential” (which is a polite way of saying “mystery meat”) navigation.

That’s a design/UX example, but development is no different. How many Single Page Apps have you seen out there that really didn’t need to be built that way? Dozens? We often put the cart before the horse and decide to build a site using a particular stack or framework without even considering the type of content we’re dealing with or whether that decision is in the best interest of the project or its end users. That goes directly back to Tim’s earlier point.

Progressive enhancement recognizes that experience is a continuum and we all have different needs when accessing the Web. Some are permanent: low vision or blindness. Some are temporary: imprecise mousing due to an injury. Others are purely situational: glare when your users are outside on a mobile device, or a dim screen because they’ve turned the brightness down to conserve battery. When we make our design and development decisions in the service of the project and the users who will access it, everyone wins.

Real answers to real questions

In the next section, Josh claims we only discuss progressive enhancement as a moral imperative. Clearly I don’t (and I’d go further and say that no one else who was quoted does either). He argues that ours is “a philosophical argument, not a practical approach to web development”. I call bullshit. As I’ve just discussed in the previous sections, progressive enhancement is a practical, fiscally responsible, developmentally robust philosophical approach to building for the Web.

But let’s look at some of the questions he says we don’t answer:

“Wait, how often do people turn off JavaScript?”

Folks turning off JavaScript isn’t really the issue. It used to be, but that was years ago; I discussed the misconception that this is still a concern a few weeks ago. The real issue is whether or not JavaScript is available. Obviously, your numbers may vary, but the UK government pegged their non-JavaScript usage at 1.1%. The more interesting bit, however, was that only 0.2% of their users fell into the “Javascript off or no JavaScript support” camp. The remaining 0.9% of their users should have gotten the JavaScript-based enhancement on offer, but didn’t. The potential reasons are myriad. JavaScript is great, but you can’t assume it’ll be available.
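It’s worth doing the arithmetic on those UK government figures. The sketch below uses only the two percentages quoted above, kept in basis points (hundredths of a percent) so the subtraction stays exact; the 100,000-visit sample is a hypothetical scale for illustration, not part of the UK data.

```typescript
// Shares quoted from the UK government figures, in basis points
// (hundredths of a percent) so the arithmetic stays in integers.
const noJsBasisPoints = 110; // 1.1% did not get the JavaScript enhancement
const jsOffBasisPoints = 20; // 0.2% had JavaScript off or unsupported

// Everyone else in the non-JS group should have run it, but didn't.
const failedBasisPoints = noJsBasisPoints - jsOffBasisPoints; // 90, i.e. 0.9%

// Fraction of the non-JS group whose browser could have run JavaScript.
const failedShareOfNoJs = failedBasisPoints / noJsBasisPoints;

// Illustrative only: scale to a hypothetical 100,000 visits.
const visits = 100_000;
const missedEnhancement = (visits * noJsBasisPoints) / 10_000; // 1100
const failedDelivery = (visits * failedBasisPoints) / 10_000; // 900

console.log(`${(failedShareOfNoJs * 100).toFixed(1)}% of non-JS visits were delivery failures`);
console.log(`${missedEnhancement} of ${visits} visits missed the enhancement; ${failedDelivery} of those were failures`);
```

By this reckoning, roughly 9 in 11 visitors who didn’t get the enhancement (about four out of five) had a browser that should have run it.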

“I’m not trying to be mean, but I don’t think people in Sudan are going to buy my product.”

This isn’t really a question, but it is the kinda thing I hear every now and then. An even more aggressive and ill-informed version I got was “I sell TVs; blind people don’t watch TV”. As a practical person, I’m willing to admit that your organization probably knows its market pretty well. If your products aren’t available in certain regions, it’s probably not worth your while to cater to folks in that region. But here’s some additional food for thought:

- When you remove barriers to access for one group, you create opportunities for others. A perfect example of this is the curb cut. Curb cuts were originally created to help people in wheelchairs cross the street. In creating curb cuts, we’ve also enabled kids to ride bicycles more safely on the sidewalk, delivery personnel to more easily move large numbers of boxes from their trucks into buildings, and parents to more easily cross streets with a stroller. Small considerations for one group pay dividends for many more. What rational business doesn’t want to enable more folks to become customers?

- Geography isn’t everything. I’m not as familiar with specific design considerations for Sudanese users, but since about 97% of Sudanese people are Muslim, let’s tuck into that. Ignoring translations and right-to-left text, let’s just focus on cultural sensitivity. For instance, a photo of a muscular, shirtless guy is relatively acceptable in much of the West, but would be incredibly offensive to a traditional Muslim population. Now your target audience may not be 100% Muslim (nor may your content lend itself to scantily-clad men), but if you are creating sites for mass consumption, knowing this might help you art direct the project better and build something that doesn’t offend potential customers.

Reach is incredibly important for companies and is something the Web enables quite easily. To squander that—whether intentionally or not—would be a shame.

Failures of understanding

Josh spends the next section discussing what he views as failures of the argument for progressive enhancement. He is, of course, still debating it as a purely moral argument, which I think I’ve disproven at this point, but let’s take a look at what he has to say…

The first “fail” he casts on progressive enhancement proponents is that we “are wrong about what’s actually on the Web.” Josh identifies three primary kinds of offerings on the Web:

- Business and personal software, both of which have exploded in use now that software has eaten the world and is accessed primarily via the web

- Copyrighted news and entertainment content (text, photos, music, video, video games)

- Advertising and marketing content

This is the fundamental issue with seeing the Web only through the lens of your own experience. Of course he would list software as the number one thing on the Web: I’m sure he uses Basecamp, Harvest, GitHub, Slack, TeamWork, Google Docs, Office 365, or any of a host of business-related Software as a Service offerings every day. Given that he benefits from fast network speeds, I’m not at all surprised that entertainment is his number two: Netflix, Hulu, HBO Go/Now… It’s great to be financially stable and live in the West. And as someone who works at a web agency, of course he would put advertising at number three. A lot of the work Viget (and most other agencies, for that matter) does is marketing-related; nothing wrong with that. But the Web is so much more than this. Here’s just a fraction of the stuff he’s overlooked:

- eCommerce,

- Social media,

- Banks,

- Governments,

- Non-profits,

- Small businesses,

- Educational institutions,

- Research institutions,

- Religious groups,

- Community organizations, and

- Forums.

It’s hard to find figures on anything but porn (which, incidentally, accounts for somewhere between 4% and 35% of the Web, depending on who you ask), but I suspect the categories he’s overlooked account for the vast majority of “pages” on the Web, even if they don’t account for the majority of its traffic. Of course, as of 2014, the majority of traffic on the Web was bots, so…

The second “fail” he identifies is that our “concepts of universal access and moral imperatives… make no sense” in light of “fail” number one. He goes on to list things he seems to think we want, even though advocating for progressive enhancement (and even universal access) doesn’t mean advocating for any of these things:

- “All software and copyrighted news/entertainment content accessed via the web should be free, and Netflix, Spotify, HBO Now, etc. should allow anyone to download original music and video files because some people don’t have JavaScript.” I’ve never heard anyone say that… ever. Advocating a smart development philosophy doesn’t make you anti-copyright or against making money.

- “Any content that can’t be accessed via old browsers/devices shouldn’t be on the web in the first place.” No one made that judgement. We just think it behooves you to increase the potential reach of your products and to have a workable fallback in case the ideal access scenario isn’t available. You know, smart business decisions.

- “Everything on the web should have built-in translations into every language.” That would be an absurd idea given that the number of languages in use on this planet tops 6,500. Even if you consider that 2,000 of those have fewer than 1,000 speakers, it’s still absurd. I don’t know anyone who would advocate for translation into every language.[1]

- “Honda needs to consider a universal audience for its marketing websites even though (a) its offline advertising is not universal, and (b) only certain people can access or afford the cars being advertised.” To address his first point, Honda actually does offline advertising in multiple languages. They even issue press releases mentioning it: “The newspaper and radio advertisements will appear in Spanish or English to match the primary language of each targeted media outlet.” As for his second argument… making assumptions about your target audience and who can or cannot afford your product seems pretty friggin’ elitist; it’s also incredibly subjective. For instance, we did a project for a major investment firm where we needed to support BlackBerry 4 & 5 even though there were many more popular smartphones on the market. The reason? They had several high-dollar investors who loved their older phones. You can’t make assumptions.

- “All of the above should also be applied to offline software, books, magazines, newspapers, TV shows, CDs, movies, advertising, etc.” Oh, I see, he’s being intentionally ridiculous.

I’m gonna skip the third fail since it presumes morality is the only argument progressive enhancement has and then chastises the progressive enhancement community for not spending time fighting for equitable Internet access and net neutrality and against things like censorship (which, of course, many of us actually do).

In his closing section, Josh talks about progressive enhancement moderates and he quotes Matt Griffin on A List Apart:

One thing that needs to be considered when we’re experimenting … is who the audience is for that thing. Will everyone be able to use it? Not if it’s, say, a tool confined to a corporate intranet. Do we then need to worry about sub-3G network users? No, probably not. What about if we’re building on the open web but we’re building a product that is expressly for transferring or manipulating HD video files? Do we need to worry about slow networks then? … Context, as usual, is everything.

In other words, it depends, which is what we’ve all been saying all along.

I’ll leave you with these facts:

- Progressive enhancement has many benefits, not the least of which are resilience and reach.

- You don’t have to like or even use progressive enhancement, but that doesn’t detract from its usefulness.

- If you subscribe to progressive enhancement, you may still have a project (or several) that isn’t really a good candidate for it (e.g., online photo editing software).

- JavaScript is a crucial part of the progressive enhancement toolbox.

- JavaScript availability is never guaranteed, so it’s important to consider offering fallbacks for critical tasks.

- Progressive enhancement is neither moral nor amoral; it’s just a smart way to build for the Web.

Is progressive enhancement necessary to use on every project?

No.

Would users benefit from progressive enhancement if it were followed on more sites than it is now?

Heck yeah.

Is progressive enhancement right for your project?

It depends.

Footnotes

[1] Of course, last I checked, over 55% of the Web was in English and just shy of 12% of the world speaks English, so…

Comments

Note: These are comments exported from my old blog. Going forward, replies to my posts are only possible via webmentions.

This is an excellent post, and it really did take me about twenty minutes to read, even with a screen reader. Also, I see you're supporting webmentions and that's excellent. I haven't had time to read the post you're responding to, but I saw the link making the rounds on Twitter and was wondering why someone would take issue with PE. After all, if we say that PE is essentially without foundation, what then do we offer as an alternative that does all the things PE is supposed to do?

Thank you! I agree. It’s quite perplexing, and I don’t think the JS community and the progressive enhancement community should be at odds. We can do so much good together.

One of the most thoughtful pieces on this that I've ever read. I'd love to have you on our podcast to talk about this!

Thank you! I'd love to. Drop me a line on the contact form.

Thanks! I certainly will!

So good. Thanks for taking the time to write it out, Aaron.

Thanks Ben!

An excellent rebuttal to the other piece. I'm still bouncing back and forth between many of the arguments, but so far these definitely seem more well thought-out than the ones from the piece you're responding to. Long but very good read!

Well said, Aaron. It's an eloquent comment I wish I could have written.

I'm sure Josh wasn't being controversial for the sake of it but PE is (primarily) a pragmatic and defensive development approach. As a non developer, would he demand that others use a specific language, database, OS, framework or editor? I hope not.

Unfortunately, the biggest opponents of PE are those who have never tried it.

Very well balanced and fair response Aaron. I read Josh's article a few days ago and the central premise of trying to pull the rug out from under PE based on the fact he believes it to be purely moral philosophy was somewhat bewildering! I mean it just isn't about that for the most part! Your response sums up everything I thought so thanks for taking the time to write it!

Thanks!

This was good, especially since I had happened to read Josh's article a couple days ago, and while he had a few good points, I think he was surprisingly (given his declaration at being a master logician) over-superlative and had too narrow a focus. What I saw is that he set up a straw man ("All hardline-PEers think everything on the web should be accessible to everyone") and then proceeded to attack that straw man. If that statement did indeed describe the position of you and others who support PE, then I would say he did a good job of rebutting that argument. But since it is just a straw man, his arguments aren't really valid or useful to the conversation of PE.

Agreed.

Very good article. I found it particularly convincing on the practical case. The only thing I'd say is that the article it responds to makes clear that if you're not a hardliner he's not talking to you. I do know some hardliners. I asked one of them "what if it's just a few days on a toy javascript project like a restaurant bill calculator with a twist?". The reply was "Use it yourself but if you put that online you are actively discriminating against people and it's not what a decent person would do."

To take things out of reductio ad absurdum, I think a lot of this comes from the huge difference between working for a major player like Microsoft or CNN and a small company. Institutions like these are parts of the social fabric and people rely on them. On the other hand, a startup with 2 or 3 developers often needs to enter a crowded market and distinguish itself by doing something particularly beautifully that is already serviced perfectly reasonably elsewhere.

They hope to use rapidly dwindling money to delight a small enough proportion of the world's internet users in time to make their business viable. They will likely fail. In the meantime they've provided something hopefully positive for a small number of people and were simply ignored by a much smaller number of lucky others who went elsewhere because of technical issues. If for a comparable cost they could have taken a PE approach, they should have done. Perhaps their business is doing a lot of server-intensive data scraping and surfacing the knowledge. This is what HTML and CSS are for. It's hard to render the graphs without JavaScript, but do some, and there's no need for a single page app.

But probably a majority of these startups *are* software as a service and attempting to build compelling software without a real, client programming language is really hard. In many cases unfeasible because you'd have to make HTML fallback for all of it for the software to be at all usable. i.e. a separate, second architecture which is user unfriendly enough to rob the company of any selling point. Ironically, the main reason these companies are using javascript rather than writing a windows or mac app as they once would have done is for the sake of inclusiveness!

They'll think about their Honda buyers with old BlackBerrys when they've sold their first Honda. And it had better be damn shiny for that to happen. I guess the main thing I'd note is that, yes, big, important websites have serious leverage, and therefore responsibilities, and the resources to meet them.

A huge majority of internet traffic goes to these players, but it's not where all the developers work.

Hey Aaron, I have a few questions, but first: when clicking your anchor links for the headings, my computer suddenly starts to speak. Is this a bug? In my 15 years on the internet I have never experienced something like that. If it is not a bug, what is the logic behind that feature? I am not blind, nor unable to see the "Copied" text that flashes.

Second, you wrote:

"How many Single Page Apps have you see out there that really didn’t need to be built that way? "

Does that really matter? Saying this basically means "you should not do it that way". Single Page Apps is a technique that works fine within the frames of HTML, CSS and JavaScript. In what situations is a Single Page App not okay? Of course they did not NEED to be, but why not? They have every "right" to create their application this way.

While Josh's article takes one extreme side of the story, yours takes the other. There is a fine middle ground here that neither of you mentions. I know that websites for the government in the country where I live are developed as typical JavaScript-first solutions (like React with no fallback). I live in a country where Internet access is available everywhere, and the mobile nets are fast and reliable. Turning off js or having a corrupt bundle download will simply result in the webpage asking you to enable js and/or refreshing your site, and I think this is perfectly reasonable. Why should they bother having to develop solutions for people for whom this is an issue? Of course, if they had to develop a similar solution for people in countries where mobile nets are terrible and internet is slow/expensive, perhaps they would do things differently, but that is not the case. Does it make sense to use PE only where it is relevant, and fully rely on the web stack where PE is not necessary, or is this a "wrong" interpretation of how PE works?

Re the voice: Yes. I have been playing around with the speechSynthesis API :-) This whole site is a bit of a Work in Progress, so some things may come and go as I toy with ideas.
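
One way to keep an experiment like this from surprising anyone is to treat it as an optional layer: detect the API first and fall back silently to the visible "Copied" text when it is missing. This is a minimal sketch, not how this site actually wires it up; `announce` and its injectable `env` parameter are hypothetical names, and only `speechSynthesis.speak()` and the `SpeechSynthesisUtterance` constructor come from the Web Speech API.

```typescript
// Minimal structural types so this compiles without the DOM lib.
interface SpeechLike {
  speak(utterance: unknown): void;
}
type UtteranceCtor = new (text: string) => unknown;

// Hypothetical helper: speak `message` only if the Web Speech API is present.
// `env` defaults to the real global object; tests can pass a stand-in.
function announce(
  message: string,
  env: Record<string, unknown> = globalThis as unknown as Record<string, unknown>,
): boolean {
  const synth = env["speechSynthesis"] as SpeechLike | undefined;
  const Utterance = env["SpeechSynthesisUtterance"] as UtteranceCtor | undefined;

  // Baseline: no API, no speech. The visible "Copied" text still does the job.
  if (!synth || typeof synth.speak !== "function" || typeof Utterance !== "function") {
    return false;
  }

  // Enhancement: queue the utterance with the browser's speech synthesizer.
  synth.speak(new Utterance(message));
  return true;
}
```

In a browser that supports the API, `announce("Copied")` speaks the word and returns `true`; anywhere else it returns `false` and the page behaves exactly as it would without the script.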

In terms of SPAs: I’m not trying to imply there’s only one way to build a site; there isn’t. What I’m saying is that we often choose tech based on *our* needs/desires (as developers) as opposed to the project’s needs or the end users’ needs. It’s something I’ve seen over and over for the last 20 years, and it happens in small teams all the way up to large orgs. It’s also not a problem unique to JS frameworks. The number of large companies that have bought into a CMS because of a good sales pitch, only to discover they had to fight to get it to meet the actual needs of the project… well, I’d need at least as many arms as Shiva to count them with my fingers :-)

Also, FWIW, I don’t agree that I have taken an extreme position here. I mentioned numerous times that PE isn’t for everyone, nor is it right for every project. "It depends." But since you asked about your situation, I’ll address that. You said: "I live in a country where Internet access is available everywhere, and the mobile nets are fast and reliable. Turning off js or having a corrupt bundle download will simply result in the webpage asking you to enable js and/or refreshing your site, and I think this is perfectly reasonable." Assuming all of this is true, are you 100% sure there are no "dead zones" in mobile coverage? No tunnels to speak of? No subways or "urban canyons"? Even if that were the case, if JS fails in a non-obvious way (say, while handling the submission of a simple contact form), your users could get pretty annoyed. In fact, I ran into exactly this issue earlier.

I was on the latest version of Chrome on my high-end MacBook Pro, connected to 200 MB/s fiber, when I hit a page with a form I needed to fill out. I typed an extremely long message, hit submit, and the form took me to an error screen. Thankfully, I have gotten in the habit of copying messages before I hit submit… I had to return to the form, refresh the page and re-fill the whole thing just to send my message. Nothing told me that some bit of invisible JS (probably used to determine my "humanity") hadn’t run properly, and if I had not taken steps to back up my message, I would have had to rewrite the whole thing. Or I might have just given up. That failed dependency gave me a bad customer experience. Wouldn’t it have been better for the form to still submit properly without the JS, with my message simply flagged for a human to check on the back-end before determining whether it’s spam?
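
The server-side half of that suggestion fits in a few lines. This is a minimal sketch assuming a hypothetical contact form whose client-side script adds a `humanityToken` field; the field names, the `Disposition` values, and the injected `verifyToken` check are all made up for illustration and are not taken from any particular anti-spam service. The design choice that matters is that a missing or failed token never discards the message; it only changes where the message is routed.

```typescript
// Hypothetical shape of a submitted contact form.
interface ContactSubmission {
  email: string;
  message: string;
  // Added by client-side JS; undefined if that script never ran.
  humanityToken?: string;
}

type Disposition = "accepted" | "needs-review" | "rejected";

// `verifyToken` stands in for whatever check a real service performs.
function handleSubmission(
  form: ContactSubmission,
  verifyToken: (token: string) => boolean,
): Disposition {
  // A genuinely empty message is a user error worth reporting back.
  if (form.message.trim() === "") {
    return "rejected";
  }
  // The enhancement failed or never loaded: keep the message for a human.
  if (form.humanityToken === undefined) {
    return "needs-review";
  }
  // A valid token lets the message through; an invalid one is still kept.
  return verifyToken(form.humanityToken) ? "accepted" : "needs-review";
}
```

With routing like this, the failure described above would have cost the sender nothing: the message lands in a review queue instead of on an error screen.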

To me, it all comes down to customer service and wanting to provide the best experience I can, even if it isn’t the ideal one.

To answer your final question: "Does it make sense to use PE only where it is relevant, and fully rely on the web stack where PE is not necessary, or is this a "wrong" interpretation of how PE works?"

If your "where" is geographically speaking, I think that’s a risky decision, as people routinely move around the world and their situation changes. For instance, you may work on a government site for France, but a French citizen may need access from Morocco, where the network situation is different. They may even be using an Internet cafe, in which case the browser & OS may be older.

If your "where" is developmentally speaking, it depends. You can draw lines like "support vs. optimization," where you have a very low-fi experience (no JS, basic CSS) for older devices across the board and then only spend your time optimizing for a modern subset of browsers/devices. This limits the "long tail" of progressive enhancement and is a much more pragmatic approach. That’s how I tend to operate. You could even take it further and limit certain UI enhancements to only the most cutting-edge devices (as you heard with my speechSynthesis experiment). As long as you have that basic fallback, you’re still good to go.
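
A "support vs. optimization" split usually hinges on a small feature-detection gate: browsers that fail it keep the HTML-and-basic-CSS baseline, and only those that pass receive the enhanced layer. The sketch below is one hypothetical way to express that; the particular features checked, the tier names, and the `detectCapabilities`/`chooseTier` functions are assumptions chosen for illustration, and a real project would pick its own thresholds. Browsers that never run the script at all simply stay on the baseline.

```typescript
// Features this hypothetical page cares about; pick your own in practice.
interface Capabilities {
  fetch: boolean;
  querySelector: boolean;
  speechSynthesis: boolean;
}

type Tier = "support" | "optimized" | "cutting-edge";
type Env = Record<string, unknown>;

// Feature-detect against a global-like object (the real `globalThis` by default).
function detectCapabilities(env: Env = globalThis as unknown as Env): Capabilities {
  const doc = env["document"] as Env | undefined;
  return {
    fetch: typeof env["fetch"] === "function",
    querySelector: doc != null && typeof doc["querySelector"] === "function",
    speechSynthesis: env["speechSynthesis"] !== undefined,
  };
}

function chooseTier(caps: Capabilities): Tier {
  // Support: leave the server-rendered HTML and basic CSS alone.
  if (!caps.fetch || !caps.querySelector) {
    return "support";
  }
  // Optimization: modern browsers get the JS enhancements; the newest
  // ones additionally get experimental extras such as speech output.
  return caps.speechSynthesis ? "cutting-edge" : "optimized";
}
```

Where the thresholds sit is the "it depends" judgment: raising them shrinks the set of browsers you spend time optimizing for, while the baseline keeps everyone else served.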

If anyone makes a moral argument for progressive enhancement, they're wrong. Progressive enhancement for the wrong features or project is a waste of productivity, time and money. Blanket progressive enhancement of every feature is pretty silly. Logically, some features need progressive enhancement, and making them work that way has a relatively high return on cost. If you have a lead form that doesn't work without JavaScript, you're doing it wrong. But if you're making progressive enhancement decisions that ultimately cost the site in total revenue, you're doing it wrong too.

You’re spot-on. Like I (and many others) have said repeatedly, it depends. It always depends and some of the factors you bring up are good things to keep in mind. You can easily undermine the primary purpose of your page by adding unnecessary dependencies that may go unmet. Similarly, you can spend days progressively enhancing a feature like drag and drop so it works all the way back to IE6 and you’re most likely wasting effort that could be better focused on features and browsers that actually matter.
